Let’s talk about the performance characteristics of the EMC DataDomain and the IBM ProtecTIER. For some months I had the privilege of having both an EMC DD890 and an IBM TS7650G onsite. Both are close to top of the range and were connected via multiple 8 Gbps fibres. It doesn’t get much better than this.

I blogged before that, in my tests, the ProtecTIER was slower than the DataDomain. I suppose that’s fighting talk, so here’s how I get to that conclusion.

Simulating a backup

What we managed to do is to generate a dummy bytestream that we send via TSM to the device like so:

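    dsmBeginTxn(handle);
    /* Declare one backup object; its data follows in separate calls. */
    dsmSendObj(handle, stBackup, NULL, &objName, &objAttrArea, NULL);
    /* Send numblocks blocks of 2 MB straight from memory. */
    for (i = 1; i <= numblocks; i++)
    {
        dsmSendData(handle, &dataBlkArea);
        sz += 2 * 1024 * 1024;   /* running total of bytes sent */
    }
    dsmEndSendObj(handle);
    dsmEndTxn(handle, DSM_VOTE_COMMIT...);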

This way we don’t have to read data off disk when we back up, or write data to disk when we restore.

Next we can make our data (pseudo)random, by setting up the first dataBlkArea from above like so:

    for(i = 0; rhubarbrhubarb...; i++)
    {
        /* pick a byte position inside the block (0 .. sz-1) */
        int j = random() % sz;
        area[j] = (random() % sizeof(long));
    }

Subsequent blocks are just permutations of this first block.
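I won’t go into exactly how the permutations are produced here. Purely as an illustration, and not necessarily what our test tool does, one way to derive a block that is a permutation of the first one is to shuffle fixed-size chunks with a Fisher-Yates shuffle. The names `permute_block` and `CHUNK` are hypothetical, and `rand()` stands in for whatever random source you prefer.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical chunk size: the unit that gets moved around within a block. */
#define CHUNK 4096

/* Fill dst with the chunks of src in shuffled order (Fisher-Yates).
 * len must be a multiple of CHUNK and dst must not overlap src.
 * Every chunk of src appears exactly once in dst, so dst is a true
 * permutation of src. Returns 0 on success, -1 if out of memory. */
int permute_block(unsigned char *dst, const unsigned char *src, size_t len)
{
    assert(len % CHUNK == 0);
    size_t n = len / CHUNK;
    if (n == 0)
        return 0;

    size_t *order = malloc(n * sizeof *order);
    if (order == NULL)
        return -1;

    /* Start with the identity order, then swap each slot with a random
     * slot at or below it. */
    for (size_t i = 0; i < n; i++)
        order[i] = i;
    for (size_t i = n - 1; i > 0; i--) {
        size_t j = (size_t)rand() % (i + 1);
        size_t t = order[i];
        order[i] = order[j];
        order[j] = t;
    }

    /* Copy the chunks out in the shuffled order. */
    for (size_t i = 0; i < n; i++)
        memcpy(dst + i * CHUNK, src + order[i] * CHUNK, CHUNK);

    free(order);
    return 0;
}
```

Calling `permute_block(next, first, blocksize)` before each send would give every block the same bytes as the first one, just in a different order.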

Throughput

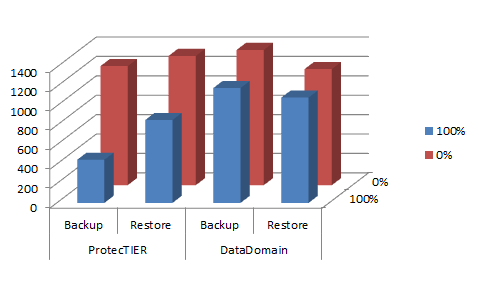

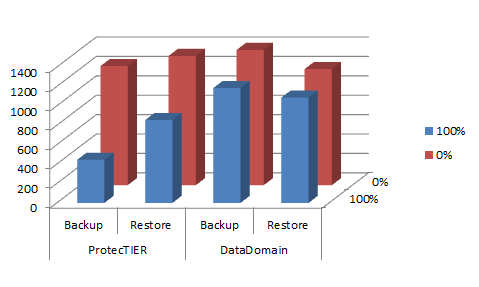

When we set our “random” parameter to 0%, and we back up in 8 streams, each object being 8 GB in size, the DataDomain backs up at 1.4 GB/s and the ProtecTIER at 1.2 GB/s.

When we set it to 100% we get 1.2 GB/s on the DataDomain but only 450 MB/s on the ProtecTIER. Also, the ProtecTIER recovers faster than it backs up, but it’s still significantly slower with our 100% “random” setting.
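To put the backup numbers side by side, here’s the arithmetic as a tiny helper (the name `slowdown_pct` is just for illustration, and I’m assuming 1 GB/s = 1000 MB/s). Going from 0% to 100% “random”, the DataDomain drops from 1400 to 1200 MB/s, roughly 14%, while the ProtecTIER drops from 1200 to 450 MB/s, which is 62.5%.

```c
#include <assert.h>

/* Percentage of throughput lost when going from `before` to `after`.
 * Both values must be in the same unit, e.g. MB/s. */
double slowdown_pct(double before, double after)
{
    assert(before > 0.0);
    return 100.0 * (before - after) / before;
}
```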

In trying to figure out what happened here, I think we need to go back to the basic theory of deduplication. I found these two excellent papers on the DataDomain and the ProtecTIER.

Identity vs Similarity based deduplication

The DataDomain is an identity based deduplication device. That means, when it inspects the incoming stream of data it tries to find exact matches. If it finds a match it stores only a “pointer” to the match. Because we’re looking for exact matches the segments are typically smaller, the index becomes larger and resides on disk.
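A minimal sketch of the identity idea, under loud assumptions: segments are fixed-size here for simplicity, FNV-1a stands in for the cryptographic fingerprint a real device would use, and a small in-memory table stands in for the big on-disk index. None of the names (`seg_index`, `dedup_ingest`, `SEG_SIZE`, `INDEX_SLOTS`) come from the DataDomain; they are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SEG_SIZE    4096   /* hypothetical fixed segment size */
#define INDEX_SLOTS 1024   /* hypothetical index capacity, a power of two */

/* FNV-1a: a simple stand-in for a cryptographic fingerprint. */
static uint64_t fingerprint(const unsigned char *p, size_t n)
{
    uint64_t h = 0xcbf29ce484222325ULL;
    for (size_t i = 0; i < n; i++) {
        h ^= p[i];
        h *= 0x100000001b3ULL;
    }
    return h;
}

typedef struct {
    uint64_t fp[INDEX_SLOTS];          /* fingerprints of stored segments */
    unsigned char used[INDEX_SLOTS];   /* 1 if the slot holds a fingerprint */
    size_t stored;                     /* unique segments written */
    size_t pointers;                   /* duplicates replaced by a reference */
} seg_index;

/* Open addressing with linear probing.
 * Returns 1 if the fingerprint was new (and is now indexed), 0 if it
 * was already present. */
static int index_insert(seg_index *ix, uint64_t fp)
{
    size_t slot = (size_t)(fp & (INDEX_SLOTS - 1));
    for (size_t probes = 0; probes < INDEX_SLOTS; probes++) {
        if (!ix->used[slot]) {
            ix->used[slot] = 1;
            ix->fp[slot] = fp;
            return 1;
        }
        if (ix->fp[slot] == fp)
            return 0;
        slot = (slot + 1) & (INDEX_SLOTS - 1);
    }
    assert(!"index full");
    return 0;
}

/* Walk the stream segment by segment. An exact fingerprint match means
 * we only keep a pointer; anything else is stored as a new segment.
 * A trailing partial segment is ignored in this sketch. */
void dedup_ingest(seg_index *ix, const unsigned char *data, size_t len)
{
    for (size_t off = 0; off + SEG_SIZE <= len; off += SEG_SIZE) {
        if (index_insert(ix, fingerprint(data + off, SEG_SIZE)))
            ix->stored++;
        else
            ix->pointers++;
    }
}
```

Start from a zero-initialised `seg_index`. Note that the table grows by one entry per unique segment, which is exactly why small segments lead to a large index.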

The ProtecTIER is a similarity based deduplication device. That means, when it inspects the incoming stream of data it tries to find similar data, not exact matches. It then finds the difference between the incoming segment and the matching segment and stores a “pointer” + the difference. That means we can have much bigger segments, and a smaller index. In fact the ProtecTIER (Diligent before being bought by IBM) was designed from the ground up to address 1 PB of data with a 4 GB index. However, because we have to do a byte wise comparison on each incoming segment to find the difference, we need to read it all back from disk.
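The “pointer + the difference” part can be sketched like this. It is a deliberately naive illustration, not the ProtecTIER’s algorithm: it assumes both segments have the same length and only finds bytes that differ at the same position, whereas a real delta encoder also has to cope with inserted and deleted data. The names `diff_run` and `diff_segments` are hypothetical. The point to notice is that `base` has to be in memory for the comparison, which on the real device means reading it back from the user data pool.

```c
#include <assert.h>
#include <stddef.h>

/* One run of bytes where the incoming segment differs from the base. */
typedef struct {
    size_t offset;
    size_t length;
} diff_run;

/* Byte-wise comparison of an incoming segment against a similar stored
 * segment of the same length. Writes up to max_runs runs into `runs`
 * and returns how many were found, or -1 if max_runs is too small. */
int diff_segments(const unsigned char *base, const unsigned char *incoming,
                  size_t len, diff_run *runs, int max_runs)
{
    assert(base != NULL && incoming != NULL && runs != NULL);

    int n = 0;
    size_t i = 0;
    while (i < len) {
        if (base[i] == incoming[i]) {
            i++;
            continue;
        }
        /* Start of a differing run: extend it as far as it goes. */
        size_t start = i;
        while (i < len && base[i] != incoming[i])
            i++;
        if (n == max_runs)
            return -1;
        runs[n].offset = start;
        runs[n].length = i - start;
        n++;
    }
    return n;
}
```

What gets stored for the incoming segment is then a reference to `base` plus the bytes covered by the runs.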

As it turns out, both approaches have disk contention issues.

The DataDomain tries to mitigate the disk problem in three ways:

1. In memory index summary

Incoming segments are fingerprinted (using a hash function such as SHA-1) and the fingerprint is added to a Bloom filter which we can use to do a very quick search. If no match is found using the Bloom filter, there is definitely no matching segment to be found in the segment index on disk. Otherwise there’s a good possibility we have a matching segment and we have to go to disk to find it.

2. Exploiting spatial locality

When a backup is run a second time there’s a good probability the data is still in the same sequence. Even if you added, changed or deleted blocks, most of the segments will be in the same order as before. That means, if the DataDomain finds a match, chances are that the match has neighbours which will match the incoming segment’s neighbours.

The DataDomain tries to exploit this spatial locality and calls it stream-informed segment layout (SISL).

3. Caching

Searching through fingerprints is hard because of their random nature. The DataDomain uses the same spatial locality idea in its caching algorithm.

These evasive actions apparently avoid 99% of all disk IOs.
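The first of those three, the in-memory index summary, is easy to sketch. What follows is a generic Bloom filter over 64-bit fingerprints, not the DataDomain’s implementation; the filter size, the number of probes and all the names (`bloom`, `bloom_add`, `bloom_maybe_contains`) are assumptions chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define BLOOM_BITS   (1u << 20)  /* hypothetical filter size in bits */
#define BLOOM_HASHES 4           /* hypothetical probes per fingerprint */

typedef struct {
    uint8_t bits[BLOOM_BITS / 8];
} bloom;

/* Derive the k-th bit position from a fingerprint by double hashing. */
static uint32_t bloom_pos(uint64_t fp, unsigned k)
{
    uint32_t h1 = (uint32_t)fp;
    uint32_t h2 = (uint32_t)(fp >> 32) | 1u;  /* odd, so probes spread out */
    return (h1 + k * h2) % BLOOM_BITS;
}

void bloom_init(bloom *b)
{
    assert(b != NULL);
    memset(b->bits, 0, sizeof b->bits);
}

/* Record a fingerprint by setting all of its bits. */
void bloom_add(bloom *b, uint64_t fp)
{
    for (unsigned k = 0; k < BLOOM_HASHES; k++) {
        uint32_t p = bloom_pos(fp, k);
        b->bits[p / 8] |= (uint8_t)(1u << (p % 8));
    }
}

/* 0: definitely not in the index, so the disk lookup can be skipped.
 * 1: possibly in the index, so the on-disk index must be consulted. */
int bloom_maybe_contains(const bloom *b, uint64_t fp)
{
    for (unsigned k = 0; k < BLOOM_HASHES; k++) {
        uint32_t p = bloom_pos(fp, k);
        if (!(b->bits[p / 8] & (1u << (p % 8))))
            return 0;
    }
    return 1;
}
```

The asymmetry is the whole trick: a 0 is a guarantee and saves a disk IO for every genuinely new segment, while a 1 can be a false positive and only tells you it’s worth going to disk.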

From my investigations, it appears the ProtecTIER tries to mitigate the disk contention issue in …, uhm, 0 (zero) ways. Here’s what I found:

The ProtecTIER gateway needs two disk pools. One is a small pool for meta data and needs to be fast. They ask for RAID10. In this one they maintain the state of the VTL and do all kinds of housekeeping. The other is the big user data pool.

The 4 graphs below show the behaviour of the two pools under various loads. The two graphs on the right hand side show the latency and it’s clear there’s no issue there (this disk is all sitting on IBM XIV behind IBM’s SVC).

The 2 graphs on the left show IOPS for the meta data pool (top graph) and for the user data pool (bottom).

We ran a backup (8 streams, 1 large 8 GB object in each) just after 09:00 with our random parameter set to 0%. It finished around 09:05. You can see we did up to 675 write IOPS to meta data and some IOs on the user data.

We now restore those same objects in 8 streams starting at 09:07 and finishing just after 09:10. Very little IO on the meta data and some reads on user data.

Here’s the clincher: We now run a backup (8 streams again, 1 large 8 GB object in each) just after 09:10 with our random parameter set to 100%. Now we only finish 14 minutes later and did a lot more reading off the user data (up to 5500 IOPS). However, the number of IOs to the meta data pool stays the same (the area under the graph is the same).

Lastly we restore those same objects and see massive reads off the user data pool.

Conclusion:

the degree of “randomness” has no effect on the meta data pool

Let’s call this switchedfabric’s first law of why the ProtecTIER gateway has disk issues.

switchedfabric’s first corollary on that:

You can’t speed up your backups in the face of more random data by making the meta data pool faster.

It’s the user data pool that needs to be fast. It also needs to be big. It also needs to be affordable.

Storage sales persons love to tell you that you can have any two of those, but not all three.

Your explanation is very good. The Diligent product was also designed from the beginning to insist on byte to byte comparison. If you read towards the end of the ProtecTIER paper, you will see that if you don’t insist on such a comparison, you can actually merge the qualities of the two approaches.

You can use similarity based de-dupe to have a tiny index, but compute and store on disk the hashes at minimal computational cost. That way you can just read the hashes off the disks, rather than the data itself, which should generate just a fraction of the disk activity. Unfortunately I don’t think anyone implemented such a hybrid approach.

Note: I am not currently affiliated with either Diligent/IBM nor Data Domain.

eitan bachmat

This comparison would also highly depend on the setup of TSM. Was VTL used for ProtecTIER and NAS for Data Domain? In case of VTL, performance can be hugely boosted by TSM tweaking.

It would also be interesting if you describe the restore speeds. Many companies we visit have horrible DD restore performance of around 30/40 MBps.

Hi Tom,

The setup was like for like, VTL on both and same everything, all driven from one TSM instance. We did indeed restore also and our results are in the post, PT recovering faster than DD but the same behaviour under more random load.

Hey,

the problem here is that the best practice settings for ProtecTIER say that you need to have a minimum of 32 parallel streams to accommodate best performance, whereby using only 8 streams may bring up slower performance figures.

rgds

How much disk did you have behind the ProtecTIER? Did you have the same number of spindles you had in the DD? That is a BIG factor my friend!

Hi Eddy,

Very valid point. The PT had 20 TB assigned to it from an 8 node SVC cluster (CF8), the backend disk being on XIV (Gen 2). It was specifically sized by IBM. So that’s 180 spindles behind 2 layers of cache (120 GB on XIV, 192 GB on SVC). The DD had 32 spindles of which 4 were spares.

The performance I’m less worried about though, it is the correlation between the, call it degree of randomness of the data, and the performance of the user data pool.

This is a design issue on the PT. My argument is this: the more random the data, the more work the PT has to do to deduplicate it, and the more IOs go to the user data pool, not the meta data pool. You can only make the PT faster by improving the performance of the (large) user data pool; the (small) meta data pool has no effect.

In the face of very random data, you simply can’t afford the PT.

Another serious issue which was not that relevant at the time but is today (for us and very likely an increasing number of customers), is that the PT can not distribute its segment processing to the clients like the DD can. This is because the PT does similarity based deduplication and needs the segment from the client together with a match from its own pool to do a diff.